"So, I have this shader that does normalmapping, and this other shader that does skinned animation. How can I use them both to render an animated normalmapped character?"

Welcome, my friend, to one of the fundamental unsolved problems of graphics programming...

It seems a reasonable expectation that if you have two effects which work separately, it should be easy to combine them, no? After all, that's exactly what happens in a program like Photoshop. I can add a drop shadow, then a blur, then apply a contrast adjustment, and everything Just Works™. Why not the same for GPU shaders?

To understand the problem, we need to understand the underlying programming model. An app like Photoshop has a very simple model:

This programming model is conceptually simple, mostly because it only deals with one data type. It is trivial to chain filters together when their input and output are the same type.

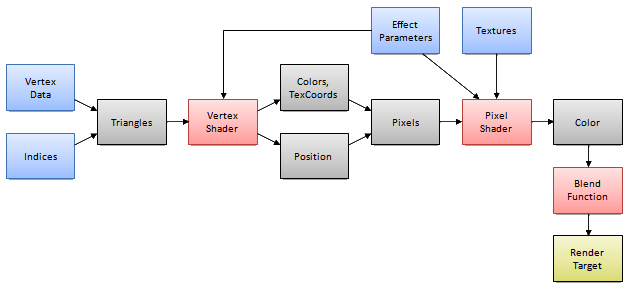

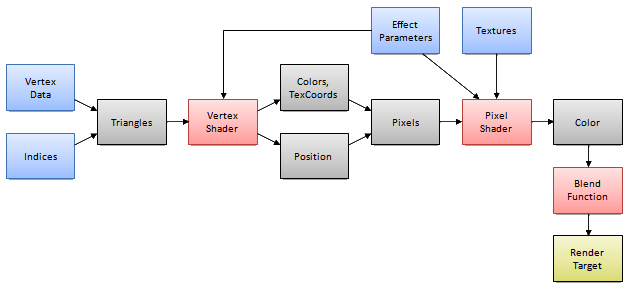

The GPU shader programming model is more flexible, and thus more complex. A slightly simplified diagram:

The blue boxes represent input data. Red are customizable processing operations, and yellow is the final output. Let's walk through what happens each time you draw something, starting at the left of the diagram and working to the right:

Yikes! Note that although the final output is a 2D bitmap (same as for a Photoshop filter), the input is a combination of vertex data, indices, effect parameters, and textures. The input and output types are not the same, which means there is no generalized way to pass the output from one shader as the input of another, and thus no way to automatically combine multiple shaders.
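To make the type mismatch concrete, here is a minimal sketch in Python rather than real shader code. Every name in it (`Image`, `DrawInputs`, `flat_shader`, `chain`) is made up for illustration, and `flat_shader` is only a stand-in for a whole vertex plus pixel shader. The point is just that image filters share one signature and so compose freely, while a draw call does not.

```python
from dataclasses import dataclass, field
from functools import reduce
from typing import Callable

Image = list[list[float]]          # a tiny grayscale bitmap
Filter = Callable[[Image], Image]  # Photoshop-style: image in, image out


def brighten(img: Image) -> Image:
    return [[min(1.0, p + 0.25) for p in row] for row in img]


def invert(img: Image) -> Image:
    return [[1.0 - p for p in row] for row in img]


def chain(*filters: Filter) -> Filter:
    # Works for any filters at all, because output type == input type.
    return lambda img: reduce(lambda acc, f: f(acc), filters, img)


@dataclass
class DrawInputs:
    # Hypothetical bundle of what a draw call consumes.
    vertices: list[tuple[float, float]]
    indices: list[int]
    parameters: dict[str, float] = field(default_factory=dict)
    textures: dict[str, Image] = field(default_factory=dict)


def flat_shader(inputs: DrawInputs, width: int, height: int) -> Image:
    # Stand-in for a real shader: ignores the geometry and fills the
    # output with the "brightness" effect parameter.
    value = inputs.parameters.get("brightness", 0.0)
    return [[value] * width for _ in range(height)]


filtered = chain(brighten, invert)([[0.0, 0.5]])

inputs = DrawInputs(vertices=[(0.0, 0.0)], indices=[0],
                    parameters={"brightness": 0.5})
rendered = flat_shader(inputs, 2, 1)
# flat_shader(rendered, 2, 1) would fail: a bitmap is not a DrawInputs.
```

The output of `flat_shader` can still be fed into `chain(...)`, since it is an image, but it cannot be fed into another shader without someone deciding by hand which input slot it belongs in.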

In fact, the only universal way to combine two shaders is to understand how they work individually, then write a new shader that contains all the functionality you are interested in. This is the price we pay for flexibility. Because shader programs can do so many different things in so many different ways, the right way to merge them is different for every pair of shaders. For instance, to use animation alongside normalmapping, it is necessary to animate the tangent vectors used by the normalmap computation, which requires changes to both the animation and normalmapping shader code.
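Here is a rough sketch of what animating the tangent means, again in Python instead of shader code. It assumes simple linear blend skinning with rigid bones (rotation only, no non-uniform scale), so the same blended matrix can be applied to both the normal and the tangent. The function names are invented for this example and do not come from any particular animation or normalmapping shader.

```python
import math

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]  # row-major rotation part of a bone transform


def rotation_z(angle: float) -> Mat3:
    c, s = math.cos(angle), math.sin(angle)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def blend(bones: list[Mat3], weights: list[float]) -> Mat3:
    # Weighted sum of the bone matrices (linear blend skinning).
    return tuple(
        tuple(sum(w * m[r][c] for m, w in zip(bones, weights)) for c in range(3))
        for r in range(3)
    )


def transform(m: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def skin_tangent_frame(normal: Vec3, tangent: Vec3,
                       bones: list[Mat3], weights: list[float]) -> tuple[Vec3, Vec3]:
    # The merged shader must skin BOTH vectors with the same blended matrix.
    skin = blend(bones, weights)
    return normalize(transform(skin, normal)), normalize(transform(skin, tangent))


bones = [rotation_z(0.0), rotation_z(math.pi / 2)]
weights = [0.5, 0.5]
normal, tangent = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)

skinned_normal, skinned_tangent = skin_tangent_frame(normal, tangent, bones, weights)
```

In this setup the skinned normal and skinned tangent stay perpendicular, while the skinned normal and the original, unanimated tangent do not. That broken tangent frame is what you would get by bolting a skinning shader onto a normalmapping shader without touching the tangent.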

However, there are specific cases in which Photoshop-style layering is possible, if you impose extra constraints on the programming model by restricting all your shaders to work in a similar way:

In a previous job I designed a system for automatically combining shader fragments in more flexible ways than are possible using rendertargets or alpha blending. This worked well, but it was complex both to implement and to extend with new shader fragments, and there were still many things it could not handle.
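For comparison, the alpha blending kind of layering is easy to sketch, precisely because every pass is constrained to produce the same thing: a color for the pixel already in the framebuffer. This is a toy illustration in Python, not the system described above. `base_pass` and `glow_pass` are hypothetical per-pixel shaders, and the blend is the standard "source alpha, inverse source alpha" formula.

```python
Color = tuple[float, float, float]


def alpha_blend(src: Color, alpha: float, dst: Color) -> Color:
    # result = src * alpha + dst * (1 - alpha), per channel
    return tuple(s * alpha + d * (1.0 - alpha) for s, d in zip(src, dst))


def base_pass() -> Color:
    # Hypothetical first shader: writes an opaque base color.
    return (0.2, 0.4, 0.6)


def glow_pass() -> tuple[Color, float]:
    # Hypothetical second shader: outputs a color plus an alpha value.
    return (1.0, 1.0, 0.0), 0.5


# Draw the same pixel twice; the blend unit layers the second pass
# over the first without either shader knowing about the other.
framebuffer = base_pass()
color, alpha = glow_pass()
framebuffer = alpha_blend(color, alpha, framebuffer)
```

Neither pass sees the other's inputs here, which is exactly why this only covers effects that can be expressed as one finished color layered over another.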

So there you have it. Combining shaders turns out to be harder than you might expect, and is usually a manual process. But on the plus side, I guess this makes good job security for us shader programmers :-)