Any time we draw graphics using textures, we must figure out how to map one grid of pixels (the texture) onto a different grid of pixels (the screen). This process is called filtering.

The pixel mapping is trivial if:

In this case the source and destination pixel grids line up exactly, so pixel values can be copied directly across.

When the source and destination pixel grids do not line up, we must somehow compute new destination pixel values from the source data. In mathematical terms, you can think of this as a two-step process:

Of course this isn't actually implemented as two stages: both parts are combined in a single computation.

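It can sometimes also be useful to think of filtering as a choice between two different operations: magnification, where the texture is drawn larger than its native size, and minification, where it is drawn smaller.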

There are many ways this can be done. For instance, when I resize an image in Paint.NET I can choose between three filtering modes: nearest neighbor, bilinear, or bicubic. Many algorithms are tuned to work with specific types of image: hqx filtering, for instance, gives great results when scaling up retro game graphics, but would be a terrible choice for digital photographs.

Realtime graphics hardware supports just four filtering modes: point sampling, linear filtering, mipmapping, and anisotropic filtering.

These are specified by three SamplerState properties: MinFilter, MagFilter, and MipFilter.

The attentive reader may wonder why the TextureFilter enum also includes GaussianQuad and PyramidalQuad options. Ignore these: they're a historical legacy. I guess someone once thought they might be useful, but I don't know any graphics cards that actually bother to implement them!

Note that these are just the built-in filtering modes. Any time a shader performs a texture lookup, the hardware automatically applies these filtering computations and returns a filtered value. If you want some other kind of filtering, you can implement it yourself in a shader by doing several texture lookups at slightly different locations to read the values of multiple source pixels, then applying your own filtering computation. This is more expensive than the built-in hardware filtering, but necessary if you want to use more advanced filters such as Gaussian blur or edge detection.
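To make the "several texture lookups at slightly different locations" idea concrete, here is a minimal CPU-side sketch in Python rather than real shader code. The `fetch` function is a hypothetical stand-in for a hardware texture lookup (point sampled, with clamped edges, both of which are assumptions), and `box_blur_3x3` is an illustrative name for a very simple custom filter that averages nine lookups spaced one texel apart.

```python
import math


def fetch(texture, u, v):
    """Hypothetical stand-in for a texture lookup: nearest texel, clamped at edges."""
    height = len(texture)
    width = len(texture[0])
    x = max(0, min(width - 1, math.floor(u * width)))
    y = max(0, min(height - 1, math.floor(v * height)))
    return texture[y][x]


def box_blur_3x3(texture, u, v):
    """Average nine lookups spaced one texel apart around (u, v)."""
    height = len(texture)
    width = len(texture[0])
    du = 1.0 / width   # size of one texel in normalized coordinates
    dv = 1.0 / height
    total = 0.0
    for oy in (-1, 0, 1):
        for ox in (-1, 0, 1):
            total += fetch(texture, u + ox * du, v + oy * dv)
    return total / 9.0


# A single bright texel surrounded by black
texture = [
    [0, 0, 0],
    [0, 9, 0],
    [0, 0, 0],
]
blurred_centre = box_blur_3x3(texture, 0.5, 0.5)
```

A real shader would do the same thing with nine texture fetches per output pixel, which is why this kind of filter costs so much more than the built-in modes. A Gaussian blur follows the same pattern but weights each lookup differently instead of dividing evenly by nine.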

Point sampling uses a trivially simple filter function. For each destination pixel, it rounds to the closest matching location in the source pixel grid, then takes the value of that single source pixel. Implications:

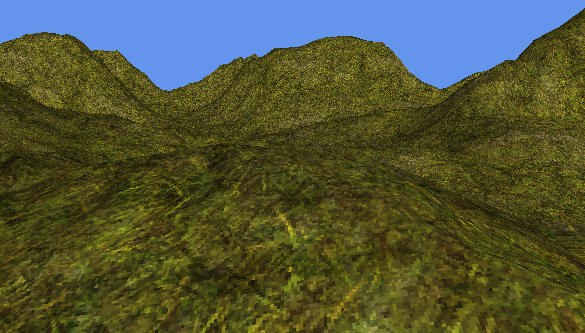

For example, here is a textured terrain using point sampling:

Note how the closest pixels (where the texture is magnified) are large and blocky, while the distant hills (where the texture is minified) look like they're covered in random green and brown noise as opposed to a proper texture! This noise looks especially ugly when the camera moves.
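The behavior described above is easy to reproduce on the CPU. The following is a rough Python sketch of point sampling, not how any particular GPU implements it; `sample_point` and `resize_point` are illustrative names, and texture coordinates are assumed to be normalized to the range 0 to 1.

```python
def sample_point(texture, u, v):
    """Return the single texel nearest to normalized coordinates (u, v) in [0, 1]."""
    height = len(texture)
    width = len(texture[0])
    # Texel i covers [i, i + 1) in texel space, so truncating picks the nearest texel.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]


def resize_point(texture, new_width, new_height):
    """Resize by point sampling at the centre of every destination pixel."""
    return [
        [sample_point(texture, (x + 0.5) / new_width, (y + 0.5) / new_height)
         for x in range(new_width)]
        for y in range(new_height)
    ]


# Magnification: every source texel turns into a 2x2 block.
blocky = resize_point([[1, 2], [3, 4]], 4, 4)

# Minification: half of the source texels are never read at all.
stripes = [[0, 9, 0, 9, 0, 9, 0, 9]]
shrunk = resize_point(stripes, 4, 1)
```

Here `blocky` comes out as four rows of repeated values (the large blocky pixels), while `shrunk` contains only 9s: the striped texture collapses to a solid color because every 0 was skipped. Shift the sample positions slightly and it would flip to all 0s, which is where the shimmering noise comes from when the camera moves.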

For each destination pixel, linear filtering works out the closest matching location in the source pixel grid. It then reads four pixel values from around this location, and interpolates between them. Implications:

The same terrain as above, using linear filtering:

Note how the closest pixels are smoothed out, and the mid ground is less noisy than with point sampling, but the distant hills still look pretty nasty. This is because linear filtering reads at most four source samples per destination pixel (a 2x2 block: two across and two down), so we can only shrink to half size before we have to start skipping some source pixels. Scaling to less than half size still causes the same aliasing as with point sampling.
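Here is a minimal Python sketch of the four-sample interpolation, along with a case that shows it failing below half size. The name `sample_linear`, the clamped edge addressing, and the convention that texel centres sit at half-integer positions are assumptions made for illustration rather than a description of specific hardware.

```python
import math


def sample_linear(texture, u, v):
    """Bilinear filter: blend the 2x2 block of texels surrounding (u, v)."""
    height = len(texture)
    width = len(texture[0])
    # Shift by half a texel so integer positions land on texel centres.
    x = u * width - 0.5
    y = v * height - 0.5
    x0 = math.floor(x)
    y0 = math.floor(y)
    fx = x - x0  # horizontal blend weight, 0..1
    fy = y - y0  # vertical blend weight, 0..1

    def texel(ix, iy):
        # Clamp at the texture edges (an assumption; wrapping is also common).
        ix = max(0, min(width - 1, ix))
        iy = max(0, min(height - 1, iy))
        return texture[iy][ix]

    top = texel(x0, y0) * (1 - fx) + texel(x0 + 1, y0) * fx
    bottom = texel(x0, y0 + 1) * (1 - fx) + texel(x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy


# Halfway between all four texels we get their average.
centre = sample_linear([[0, 10], [20, 30]], 0.5, 0.5)

# Shrinking an 8 texel row to 2 pixels: each sample reads only two
# neighbouring texels, so both bright texels are missed completely.
row = [[9, 0, 0, 0, 9, 0, 0, 0]]
quarter = [sample_linear(row, (x + 0.5) / 2, 0.5) for x in range(2)]
```

In the quarter-size case a proper average of each group of four texels would give 2.25, but both samples come out as 0 because the 9s fall between the texels that were actually read.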

We could fix this by averaging more than four source pixels, right? But sampling pixel values is expensive, and graphics cards have to balance the conflicting goals of high visual quality and high performance. Linear filtering is a classic compromise: good enough to look great when used wisely, but still far from perfect.

Consider, for instance, this 3x3 image, scaled up using point sampling:

If I scale it up on my GPU using linear filtering, I get:

That's not horrible, but also not exactly beautiful. If I scale up the same image in Paint.NET using a bicubic filter, I get a much smoother result:

Paint.NET produces better results than my GPU because it uses a more sophisticated filter algorithm that examines more than four source pixels when computing each destination value.
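To give a feel for what "examines more than four source pixels" means, here is a one-dimensional Python sketch of cubic interpolation. I don't know which kernel Paint.NET actually uses, so the Catmull-Rom spline below is an assumption chosen because it is a common cubic filter; `catmull_rom` and `sample_cubic_1d` are illustrative names. In one dimension it reads four texels where linear filtering reads two, and applying it along both axes reads a 4x4 block of sixteen.

```python
import math


def catmull_rom(p0, p1, p2, p3, t):
    """Cubic curve through p1 (t = 0) and p2 (t = 1), shaped by p0 and p3."""
    return 0.5 * (
        2 * p1
        + (-p0 + p2) * t
        + (2 * p0 - 5 * p1 + 4 * p2 - p3) * t * t
        + (-p0 + 3 * p1 - 3 * p2 + p3) * t * t * t
    )


def sample_cubic_1d(row, u):
    """Sample a single row of texels at normalized coordinate u using four taps."""
    width = len(row)
    x = u * width - 0.5  # texel centres sit at half-integer positions
    x1 = math.floor(x)
    t = x - x1

    def texel(i):
        # Clamp at the edges (an assumption)
        return row[max(0, min(width - 1, i))]

    return catmull_rom(texel(x1 - 1), texel(x1), texel(x1 + 1), texel(x1 + 2), t)


# On a smooth ramp the cubic filter reproduces the ramp exactly.
midpoint = sample_cubic_1d([0, 1, 2, 3], 0.5)
```

The two outer texels only influence the slope of the curve, which is what lets a cubic filter produce smoother gradients than the straight-line blend of linear filtering.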

Coming up: mipmaps, anisotropic filtering, and the challenges presented by alpha cutouts and sprite sheet borders...