Alpha cutouts (where you use the alpha channel of a texture to determine the shape of a sprite or billboard) are the cause of many an aliasing problem. There are basically two ways to do alpha cutouts, both tragically flawed.

As long as you start with high quality source textures, and draw them using bilinear filtering and mipmaps, alpha blended cutout textures can be pleasingly free of aliasing artifacts.

But alpha blending does not play nice with the depth buffer, and it can be a PITA to manually depth sort every piece of alpha cutout geometry!
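Why is the sorting needed at all? The standard "over" blend is not commutative, so translucent objects have to be drawn back to front. Here is a minimal Python sketch of that sort. It assumes a hypothetical `Sprite` record with a single depth value per sprite and uses grayscale colors to keep the arithmetic readable. Real scenes with intersecting or interleaved geometry often can't be sorted this simply.

```python
from dataclasses import dataclass


@dataclass
class Sprite:
    """Hypothetical billboard record: one depth, one grayscale color, one alpha."""
    name: str
    depth: float  # distance from the camera (larger = farther away)
    color: float  # grayscale color, 0..1
    alpha: float  # opacity, 0..1


def blend_over(dst: float, src_color: float, src_alpha: float) -> float:
    """Standard 'over' blending: src * a + dst * (1 - a)."""
    return src_color * src_alpha + dst * (1.0 - src_alpha)


def draw_sorted(background: float, sprites: list[Sprite]) -> float:
    """Blend sprites back to front so farther sprites are composited first."""
    result = background
    for s in sorted(sprites, key=lambda s: s.depth, reverse=True):
        result = blend_over(result, s.color, s.alpha)
    return result


near = Sprite("near", depth=1.0, color=1.0, alpha=0.5)
far = Sprite("far", depth=10.0, color=0.0, alpha=0.5)

# Sorting makes the result independent of submission order.
print(draw_sorted(0.5, [near, far]))  # 0.625
print(draw_sorted(0.5, [far, near]))  # 0.625

# Drawing near before far (the wrong order) gives a different answer.
print(blend_over(blend_over(0.5, near.color, near.alpha), far.color, far.alpha))  # 0.375
```

Sorting per object like this is the easy part. The pain comes from doing it every frame for every piece of cutout geometry, and from cases where no single correct order exists.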

In an attempt to make the hardware depth buffer work with alpha cutouts, many developers switch from full alpha blending to alpha testing (using the XNA AlphaTestEffect, or the clip() HLSL pixel shader intrinsic). This does indeed avoid the complexity of manually sorting alpha blended objects, but at the cost of aliasing.

The problem is that alpha testing only provides a boolean on/off decision, with no ability to draw fractional alpha values.
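To make the difference concrete, here is a small Python sketch comparing the two per-pixel operations. The test function mimics the behavior of HLSL `clip(alpha - reference)`, which discards the pixel when its argument is negative. The 0.5 reference value is an arbitrary choice for illustration, not a default taken from XNA.

```python
def alpha_blend(src: float, dst: float, alpha: float) -> float:
    # Fractional alpha produces a fractional mix of source and destination.
    return src * alpha + dst * (1.0 - alpha)


def alpha_test(src: float, dst: float, alpha: float, reference: float = 0.5) -> float:
    # Like clip(alpha - reference): a negative argument discards the pixel
    # (the destination is left untouched), otherwise the source is written
    # as if it were fully opaque. There is no in-between.
    if alpha - reference < 0:
        return dst
    return src


alphas = [0.0, 0.25, 0.5, 0.75, 1.0]
print([alpha_blend(1.0, 0.0, a) for a in alphas])  # [0.0, 0.25, 0.5, 0.75, 1.0]
print([alpha_test(1.0, 0.0, a) for a in alphas])   # [0.0, 0.0, 1.0, 1.0, 1.0]
```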

In mathematical terms, when we quantize a smoothly varying signal such as the alpha channel of a texture into a binary on/off decision, we add high frequency content to that signal. The Nyquist limit of our sampling rate stays where it was, so more of the signal now lies above it, and anything above the Nyquist limit shows up as aliasing.
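This effect is easy to measure in one dimension. The Python sketch below samples a smooth alpha profile (a raised cosine, chosen purely for illustration), thresholds it at 0.5 the way an alpha test would, and compares how much energy each version has in the higher frequency bins of a naive discrete Fourier transform. The cutoff bin of 4 is likewise an arbitrary illustrative choice.

```python
import cmath
import math


def dft_magnitudes(signal: list[float]) -> list[float]:
    """Naive O(n^2) discrete Fourier transform, returning |X[k]| for each bin k."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)))
        for k in range(n)
    ]


def high_frequency_energy(signal: list[float], cutoff: int) -> float:
    """Sum of squared magnitudes for bins from `cutoff` up to n/2."""
    mags = dft_magnitudes(signal)
    n = len(signal)
    return sum(m * m for m in mags[cutoff : n // 2 + 1])


n = 32
# Smoothly varying alpha: a single low-frequency cosine bump.
smooth = [0.5 - 0.5 * math.cos(2 * math.pi * (t + 0.5) / n) for t in range(n)]
# The same alpha after an on/off alpha test at 0.5: a hard-edged square pulse.
binary = [1.0 if a >= 0.5 else 0.0 for a in smooth]

print(high_frequency_energy(smooth, 4))  # essentially zero
print(high_frequency_energy(binary, 4))  # clearly nonzero: the hard edges add harmonics
```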

Or I can avoid the math and try a common sense explanation:

We would normally antialias our source textures by including fractional values around shape borders, but alpha testing does not support fractional alpha values, so this is no longer possible.

We would normally avoid aliasing when resampling textures via bilinear filtering and mipmapping. But even if the original texture uses only on/off boolean alpha, when we filter between multiple texels, or scale it down to create 1/2, 1/4, and 1/8 size mip levels, these will inevitably end up with fractional alpha values. To use alpha testing, we must round all such values to either fully transparent or fully opaque, which entirely defeats the point of filtering and mipmapping.
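Here is a tiny Python illustration of the mipmapping half of that argument. It assumes a simple 2x2 box filter and power-of-two texture sizes (real mip generation may use better filters, and bilinear sampling produces fractional values in much the same way). A one-texel-wide vertical line, like a thin grass frond, gets fractional alpha at the first mip level and is then rounded away entirely by the alpha test at the next.

```python
def downsample_2x(mask: list[list[float]]) -> list[list[float]]:
    """Build the next mip level by averaging each 2x2 block (box filter).

    Assumes even width and height.
    """
    h, w = len(mask), len(mask[0])
    return [
        [
            (mask[y][x] + mask[y][x + 1] + mask[y + 1][x] + mask[y + 1][x + 1]) / 4.0
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


def alpha_test_mask(mask: list[list[float]], reference: float = 0.5) -> list[list[float]]:
    """Round every alpha value to fully opaque (1.0) or fully transparent (0.0)."""
    return [[1.0 if a >= reference else 0.0 for a in row] for row in mask]


# A 4x4 binary mask containing a thin vertical line one texel wide.
mip0 = [[0.0, 1.0, 0.0, 0.0] for _ in range(4)]
mip1 = downsample_2x(mip0)  # [[0.5, 0.0], [0.5, 0.0]]: fractional alpha appears
mip2 = downsample_2x(mip1)  # [[0.25]]

print(alpha_test_mask(mip1))  # [[1.0, 0.0], [1.0, 0.0]]: the line gets fatter
print(alpha_test_mask(mip2))  # [[0.0]]: the line vanishes completely
```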

We would normally avoid aliasing along the edges of triangle geometry by multisampling, but remember this only runs the pixel shader once per final output pixel. Since each group of multisamples gets a single alpha value from this single shader invocation, each multisample inevitably also has the same alpha test result, so multisampling is useless when it comes to smoothing our alpha cutout silhouette.
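A rough Python model of one multisampled pixel shows the difference between a geometry edge and an alpha cutout edge. The 4x sample pattern, the screen-space texture lookup, and the helper functions are hypothetical simplifications, not how any particular GPU lays out its samples. Geometry coverage is tested per sample, but the alpha test runs once at the pixel center and its single result is shared by every sample.

```python
from typing import Callable

# Hypothetical 4x multisample positions within a pixel (0..1 pixel space).
SAMPLE_OFFSETS = [(0.25, 0.25), (0.75, 0.25), (0.25, 0.75), (0.75, 0.75)]


def msaa_resolve(
    px: int,
    py: int,
    triangle_covers: Callable[[float, float], bool],
    texture_alpha: Callable[[float, float], float],
    reference: float = 0.5,
) -> float:
    """Return the fraction of samples that end up drawn for pixel (px, py)."""
    # The pixel shader runs once, at the pixel center, producing one alpha value...
    alpha = texture_alpha(px + 0.5, py + 0.5)
    passes = alpha >= reference  # ...so every sample shares one alpha test result.
    # Geometry coverage, on the other hand, is evaluated per sample.
    covered = [triangle_covers(px + dx, py + dy) for dx, dy in SAMPLE_OFFSETS]
    hits = sum(1 for c in covered if c and passes)
    return hits / len(SAMPLE_OFFSETS)


def geometry_edge(x: float, y: float) -> bool:
    return x < 10.4  # a triangle edge passing through pixel column 10


def cutout_edge(u: float, v: float) -> float:
    return 1.0 if u < 10.4 else 0.0  # an alpha cutout edge in the same place


def opaque(u: float, v: float) -> float:
    return 1.0


def full_screen(x: float, y: float) -> bool:
    return True


print(msaa_resolve(10, 0, geometry_edge, opaque))     # 0.5: the triangle edge is smoothed
print(msaa_resolve(10, 0, full_screen, cutout_edge))  # 0.0: the cutout edge is all or nothing
```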

Yowser! Our entire arsenal of antialiasing techniques just vanished in a puff of smoke...

The only common antialiasing technique that works with alpha tested cutouts is supersampling, which is great if you can afford it, but most games cannot.
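Supersampling works because it runs the whole shader, alpha test included, once per subsample and then averages the results. A minimal Python sketch, assuming an ordered grid of subsamples, a simple box-filter resolve, and a hypothetical screen-space `texture_alpha` function:

```python
from typing import Callable


def supersampled_alpha_test(
    px: int,
    py: int,
    texture_alpha: Callable[[float, float], float],
    grid: int = 4,
    reference: float = 0.5,
) -> float:
    """Alpha test each of grid*grid subsamples, then average the pass/fail results."""
    passed = 0
    for j in range(grid):
        for i in range(grid):
            # Subsample at the center of each cell of a grid x grid pattern.
            u = px + (i + 0.5) / grid
            v = py + (j + 0.5) / grid
            if texture_alpha(u, v) >= reference:
                passed += 1
    return passed / (grid * grid)


def cutout_edge(u: float, v: float) -> float:
    return 1.0 if u < 10.3 else 0.0  # hypothetical hard cutout edge inside pixel 10


print(supersampled_alpha_test(10, 0, cutout_edge))  # 0.25: fractional coverage at the edge
```

The catch is the cost: with `grid=4` that is 16 shader invocations per pixel instead of one.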

Example time. Consider this image, taken from the XNA billboard sample, which uses full alpha blending with a two-pass depth sorting technique:

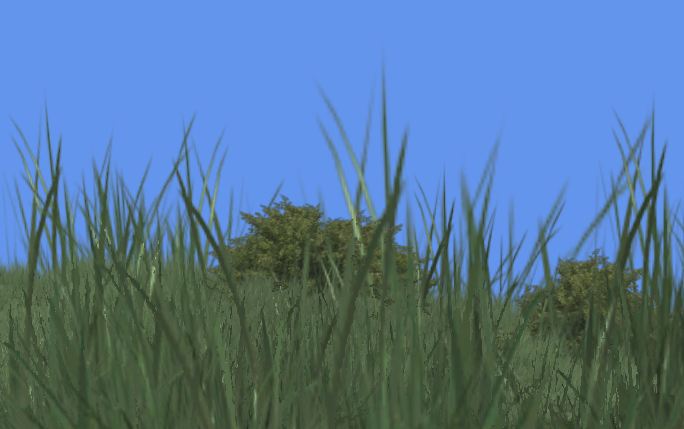

If I change the code to use alpha testing instead of blending, the standard depth buffer handles sorting with no more need for that expensive two-pass technique, but at the cost of nasty aliasing on the thinner parts of the grass fronds:

How to fix this?

You don't. There are really just three choices for alpha cutouts:

In MotoGP we used a mixture of #2 and #3, and let the artists choose which was the lesser evil for each alpha cutout texture.